Did you know that over 60% of major AI content failures could have been prevented with proper human oversight? In an era where artificial intelligence is increasingly making decisions that shape our lives, the lack of effective human supervision can lead to disastrous financial, ethical, and reputational consequences. This article uncovers why human oversight in AI is not just an afterthought but a non-negotiable safeguard for organizations aiming to ensure responsible, trustworthy, and robust AI systems. Read on to discover how oversight protects you from costly mistakes, builds public trust, and aligns AI with our deepest values.

Why Human Oversight in AI Systems Has Become a Crucial Safeguard Today

As AI systems become more sophisticated, their integration into critical sectors such as healthcare, finance, and law enforcement has revealed both the transformative potential and the risks of automation. Human oversight is increasingly vital for managing the unpredictability of these systems and ensuring that AI decisions align with human values and regulatory standards. In the earlier days of artificial intelligence, humans mainly designed and monitored AI models. Today, oversight responsibilities extend to reviewing AI-generated outputs, intervening in automated processes, and maintaining ongoing accountability.

The EU AI Act and other emerging regulations make it clear that oversight in AI isn't a luxury. It is essential for guarding against automation bias and unintended consequences. In high-stakes applications, a single error in an AI system can lead to financial loss, reputational ruin, or harm to individuals. As AI becomes embedded in everyday life, the responsibility for ensuring that it respects fundamental rights and ethical principles increasingly falls on human shoulders. Organizations that overlook this imperative risk compliance penalties and also erode public trust in their brands and digital offerings.

"According to recent studies, over 60% of major AI content failures could have been prevented with proper human oversight."

Defining Human Oversight in AI: The Role of Humans in Safe, Ethical AI Systems

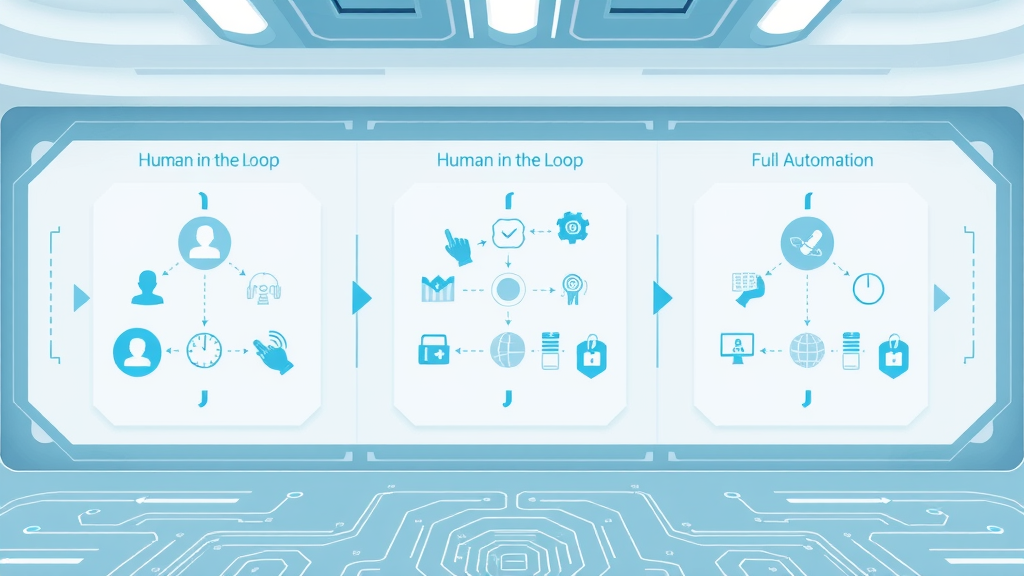

Human oversight in AI refers to the practice of having informed, responsible humans involved at various stages of the AI system lifecycle. Unlike full automation—where AI operates independently—human oversight entails deliberate checks and interventions by human reviewers to guide, monitor, and correct AI behavior. This oversight serves as a vital counterbalance, ensuring that AI models don’t operate as black boxes but remain transparent, fair, and aligned with societal norms.

- Why oversight matters: Oversight keeps AI models accountable and ensures that their decisions support legal, ethical, and social priorities. Without it, organizations risk deploying AI systems that perpetuate bias or violate data governance standards.

- How oversight differs from full automation: Full automation prioritizes speed and efficiency, whereas human oversight emphasizes accuracy, fairness, and compliance. Human intervention is especially critical for high-risk AI applications, whose outputs can have profound societal implications.

- Where oversight has caught AI errors: Major tech firms employ review teams to catch and correct problematic AI outputs before they reach the public. Examples include fabricated news content and discriminatory recommendations from hiring algorithms. These reviews help the firms avoid costly legal and ethical fallout.

Ultimately, human oversight is about embedding continuous human judgment within AI workflows. This helps prevent ethical lapses and ensures critical oversight mechanisms remain robust as AI technologies evolve.

What Readers Will Gain from Understanding Human Oversight in AI

By exploring the nuances of human oversight in AI, you’ll gain more than a theoretical understanding. You’ll learn practical strategies for managing risk, strengthening AI ethics, and building long-term organizational trust. Mastery in this area separates responsible innovators from reckless adopters.

- Why oversight is essential for managing AI risk: You’ll see how effective oversight mechanisms curb automation bias, address potential risks, and protect fundamental rights in high-stakes environments.

- Tools and frameworks for applying human oversight: We’ll introduce practical frameworks and actionable steps to ensure that AI governance includes robust human review, supported by data documentation and audit trails.

- Awareness of the impact of human oversight on AI ethics and public trust: Learn how oversight builds trust with users and regulators alike, serving as the ethical backbone for responsible AI development and deployment.

| Oversight Model | Level of Human Involvement | Benefits | Risks | Examples |
|---|---|---|---|---|
| Full Automation | No routine human involvement after system deployment | Efficiency, speed, reduced labor costs | Automation bias, loss of control, ethical blind spots | Algorithmic trading, automated chatbots without review |
| Human-in-the-Loop | Humans directly intervene, approve, or modify AI outputs | High accuracy, accountability, ethical compliance | Slower decision cycles, resource-intensive | Medical diagnosis assistance, content moderation |
| Human-on-the-Loop | Humans monitor outputs and intervene only if necessary | Balance of efficiency and oversight, scalable | Requires strong monitoring tools, possible delayed responses | Credit scoring, law enforcement surveillance with audits |
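The following sketch shows how the three models differ in practice by routing a single AI output according to whichever oversight model is in force. The `OversightModel` enum, the `route_output` function, the model-reported `confidence` score, and the 0.8 alert threshold are all hypothetical choices made for this example. None of them come from any standard or regulation.

```python
from dataclasses import dataclass
from enum import Enum


class OversightModel(Enum):
    FULL_AUTOMATION = "full_automation"
    HUMAN_IN_THE_LOOP = "human_in_the_loop"
    HUMAN_ON_THE_LOOP = "human_on_the_loop"


@dataclass
class AIOutput:
    content: str
    confidence: float  # hypothetical model-reported score in [0, 1]


def route_output(output: AIOutput, model: OversightModel,
                 alert_threshold: float = 0.8) -> str:
    """Decide what happens to an output under each oversight model (illustrative policy)."""
    if model is OversightModel.FULL_AUTOMATION:
        # No routine human involvement: the output is released directly.
        return "publish"
    if model is OversightModel.HUMAN_IN_THE_LOOP:
        # Every output waits for a human to approve, modify, or reject it.
        return "hold_for_approval"
    # Human-on-the-loop: release by default, but alert a monitor when
    # the output looks risky so a human can step in if necessary.
    if output.confidence < alert_threshold:
        return "publish_and_alert_monitor"
    return "publish"
```

Under human-on-the-loop, the output is released before any human looks at it. The alert only ensures that a monitor can step in afterward, which is why the table lists delayed responses as a risk of that model.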

AI System Risks: Where Human Oversight in AI Prevents Costly Mistakes

The lack of human oversight in AI can unleash a cascade of issues—from financial losses to reputational damage—underscoring why AI ethics and risk management must be central themes for organizations. Real-world failures remind us that AI models, no matter how advanced, are not immune to making mistakes that can deeply affect individuals and society.

- Bias and discrimination risks in generative AI outputs: Without oversight, generative AI can produce biased or offensive outputs, perpetuating harmful stereotypes and inviting public backlash. Human reviewers play a critical role in identifying and mitigating these issues before they escalate.

- Misinformation and harmful content: Content-producing AI systems may inadvertently generate false or misleading information, risking harm to users or communities. Oversight mechanisms ensure rigorous fact-checking and content validation, preventing potential missteps from reaching the public.

- Regulatory breaches under the EU AI Act and similar frameworks: New laws such as the European Union's AI Act require high-risk AI systems to be designed for effective human oversight. Non-compliance can bring substantial fines and, in some cases, orders to withdraw a system from the market.

- Ethical lapses and reputational damage: AI failures that escape detection can erode trust, cause public outrage, and initiate costly litigation. Human oversight acts as a crucial safeguard, catching unintended consequences early and preserving both user safety and corporate reputation.

By investing in robust oversight structures, developers and deployers of AI technologies can greatly reduce the risk of undetected errors. They can also ensure that their systems are reliable and respect fundamental rights.

The Role of Human Oversight in AI Ethics and Risk Management

Interaction Between Human Oversight and Generative AI: Moral and Legal Necessities

As generative AI becomes more prevalent, its ability to produce text, images, and even decisions at scale raises significant ethical concerns. Human oversight keeps generative AI models within moral and legal boundaries, especially when their outputs may affect individuals' rights or pose public risks. Effective oversight mechanisms also help organizations meet evolving AI governance standards and address emerging challenges head-on.

In high-risk applications such as healthcare diagnostics or automated hiring, human oversight of AI outputs is an ethical imperative. Under frameworks like the EU AI Act, it is also a legal requirement. This keeps organizations accountable for their AI systems and prevents them from shifting blame onto automated processes. Integrating oversight with generative AI means placing human judgment at key intervention points, balancing innovation with safety and upholding responsible AI principles.

Human Oversight Frameworks for AI Systems: Balancing Innovation and Accountability

Building effective human oversight in AI requires structured frameworks that blend technological innovation with organizational accountability. These frameworks typically include clear documentation protocols, oversight mechanisms for auditing decisions, and cross-disciplinary collaboration spanning ethics, legal, and technical domains. Such comprehensive systems allow organizations to sustain innovation without sacrificing transparency, fairness, or compliance.

With the AI Act and similar legislation taking hold, frameworks now demand demonstrable evidence of oversight—such as training logs, review records, and impact assessments. This risk management approach ensures that every layer of the AI system is subject to human scrutiny. Companies leading on AI ethics understand that investing in robust oversight both reduces potential risks and supports long-term business sustainability.

"The AI Act now requires demonstrable human oversight for high-risk AI systems, shifting accountability back to organizations and their leadership."

Human Oversight in AI Content Production: Lessons from Costly Public Failures

Some of the most publicized AI failures—ranging from offensive chatbot interactions to discriminatory algorithmic content—stem from a lack of appropriate human oversight. By examining these high-profile cases, organizations can extract important lessons to strengthen their own AI governance and avoid similar ethical pitfalls.

- Real-world cases where a lack of oversight led to financial or ethical disasters: Notable incidents highlight the need for humans to catch and correct model errors. One example is AI-powered recruitment tools that rejected qualified candidates because of bias in their training data. Without oversight, such failures have resulted in lawsuits, public apologies, and damaged reputations.

- Debate: Is more human control always better? While some advocate for maximum intervention to mitigate risks, others warn of excessive bureaucracy stifling innovation. The challenge lies in finding an optimal balance where human oversight enables both safe operation and continued progress of AI technologies.

These case studies reinforce that human oversight is not just about avoiding the next scandal but ensuring responsible AI that upholds both ethical and business values.

Challenges and Limitations of Human Oversight in AI

Human oversight in AI isn’t without challenges; organizations must navigate multiple barriers while working to establish effective oversight frameworks. These hurdles can create friction, slow development, and occasionally lead to over-reliance on automated “oversight” mechanisms themselves. Understanding these obstacles is critical for leaders seeking to implement oversight without sacrificing efficiency or innovation.

- Time and cost constraints: Integrating human oversight in AI operations often increases time-to-market and operational costs. Manual reviews, interventions, and reporting processes require skilled personnel and sustained investment, making it a difficult balance for resource-limited teams.

- Skill and training gaps in oversight teams: Effective oversight relies on staff with specialized skills in AI ethics, technical assessment, and regulatory compliance. Organizations frequently struggle with knowledge gaps—underscoring the need for ongoing education to ensure meaningful human review.

- Potential for over-reliance on automated 'oversight' tools: Ironically, some organizations deploy software to oversee their AI, risking a false sense of security. True oversight requires human judgment; automated systems should support—not replace—critical human intervention, especially for high-risk or ambiguous scenarios.

Tackling these challenges requires a proactive mindset. By prioritizing transparency, regular training, and a clear delineation of oversight responsibilities, organizations can maximize the benefits and minimize the downsides of human involvement in AI.

Innovative Approaches for Human Oversight in Generative AI

AI System Interfaces Built for Human Oversight: Design, Transparency, and Control

For human oversight in AI to be effective, the interfaces between people and AI systems must be transparent and user-friendly. Many modern AI platforms now include tools that highlight decision pathways, flag ambiguous outcomes, and prompt reviewers for feedback. Because they are designed with transparency at their core, these tools help human auditors spot potential errors and resist automation bias.

Enhanced control features—like “rollback,” audit trails, or explanation modules—empower oversight teams to intervene meaningfully when an AI system outputs questionable results. By investing in better tools, organizations enable human oversight that is nimble, reliable, and actionable across a variety of use cases, from content moderation to financial decisioning.
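The next example is a minimal sketch of two of these control features: flagging ambiguous outcomes for a reviewer and rolling back to an earlier version. The `ReviewableOutput` class, its `update` and `rollback` methods, and the 0.6 ambiguity threshold are hypothetical. The sketch also assumes the AI system reports a confidence score for each output.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReviewableOutput:
    """An AI output whose versions are kept so a reviewer can roll back (hypothetical design)."""
    versions: List[str] = field(default_factory=list)
    flagged: bool = False

    def update(self, new_content: str, confidence: float,
               ambiguity_threshold: float = 0.6) -> None:
        # Record every version instead of overwriting, so earlier states stay recoverable.
        self.versions.append(new_content)
        # Flag low-confidence results so the interface can prompt a reviewer.
        self.flagged = confidence < ambiguity_threshold

    @property
    def current(self) -> str:
        return self.versions[-1] if self.versions else ""

    def rollback(self) -> str:
        """Discard the latest version and return the one before it."""
        if len(self.versions) < 2:
            raise ValueError("no earlier version to roll back to")
        self.versions.pop()
        # A reviewer performed the rollback, so the pending flag is cleared.
        self.flagged = False
        return self.current
```

Keeping every version rather than overwriting is what makes rollback possible. A real deployment would likely store this history durably rather than in memory.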

Continuous Learning: Training Humans and AI Systems Together

Successful oversight is an evolving process that requires both people and AI models to keep learning. Regular training programs keep human reviewers up to date on AI ethics, system capabilities, and risk management strategies. At the same time, feedback from oversight activities can be fed into retraining and evaluation so that models improve over time. Reviewer corrections and rejected outputs are examples of this feedback, and using them produces smarter, more trustworthy systems.

This synergy between human training and AI learning is the backbone of responsible AI governance. As both sides improve, organizations are better equipped to handle complex scenarios and sophisticated adversarial attacks, ensuring that artificial intelligence continues to serve the public good.
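One concrete form this feedback loop can take is turning reviewer corrections into (input, target) pairs that a team can later use to retrain or evaluate a model. The share of corrected outputs can then serve as a simple health metric. The sketch below assumes a hypothetical `ReviewedOutput` record and does not retrain anything itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ReviewedOutput:
    prompt: str
    model_output: str
    reviewer_correction: Optional[str] = None  # None means the reviewer accepted the output


def _was_corrected(r: ReviewedOutput) -> bool:
    # A correction only counts if the reviewer actually changed the text.
    return r.reviewer_correction is not None and r.reviewer_correction != r.model_output


def build_feedback_dataset(reviews: List[ReviewedOutput]) -> List[Dict[str, str]]:
    """Collect corrected outputs as (input, target) pairs for later retraining or evaluation."""
    return [{"input": r.prompt, "target": r.reviewer_correction}
            for r in reviews if _was_corrected(r)]


def correction_rate(reviews: List[ReviewedOutput]) -> float:
    """Fraction of reviewed outputs that humans had to correct."""
    if not reviews:
        return 0.0
    return sum(_was_corrected(r) for r in reviews) / len(reviews)
```

A rising correction rate is one signal that a model needs attention before its errors reach users.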

People Also Ask

What is human oversight in generative AI?

Human oversight in generative AI means that trained individuals actively monitor, review, and sometimes correct content or decisions made by AI systems that generate text, images, or data. Oversight responsibilities can include approving outputs before publication, flagging potential risks in content, and intervening when the AI model produces questionable material. Practical examples abound: from editorial review of AI-authored articles to risk assessments of AI-generated investment recommendations, strong oversight reduces the likelihood of bias and misinformation reaching end users.

What is the meaning of human oversight?

Human oversight, in the context of AI, describes the responsibility and active involvement of people in monitoring and managing automated systems. Depending on the AI system, this can involve reviewing AI outcomes, setting ethical guidelines, and intervening to prevent or correct errors. Oversight is foundational to robust AI governance, ensuring that AI remains transparent, accountable, and aligned with social and legal standards.

How can humanity regulate AI?

Regulating AI requires coordinated international and local efforts. These efforts combine legal frameworks, such as the EU AI Act or proposed US legislation, with practical enforcement through robust human oversight. Effective regulation hinges on mechanisms such as independent auditing, transparency requirements, and the integration of human review into every stage of AI system development and deployment. By combining clear rules with proactive oversight, humanity can foster responsible AI innovation while protecting the public interest.

What is human intervention in AI?

Human intervention is the act of directly correcting, adjusting, or halting AI processes in real time. It is narrower than oversight, which also encompasses ongoing supervision, monitoring, and policy-setting. In practice, intervention might mean a human pausing a misfiring automated system or requesting a manual review of suspicious outputs. Both intervention and oversight are critical for risk management and for preventing errors or harms caused by unintended consequences in AI technologies.
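To illustrate the distinction, here is a minimal sketch of an intervention control that lets a human operator pause an automated batch job mid-run and record why. The `InterventionSwitch` class, the `process_batch` function, and the string-uppercasing stand-in for the automated step are hypothetical. The pause flag uses Python's standard `threading.Event`, so other threads, such as an operator console, can set it safely.

```python
import threading
from typing import List, Optional


class InterventionSwitch:
    """A pause/resume control a human operator can use to halt an automated process (hypothetical)."""

    def __init__(self) -> None:
        self._running = threading.Event()
        self._running.set()  # the system starts in the running state
        self.last_reason: Optional[str] = None

    def pause(self, reason: str) -> None:
        # Called by a human operator; the reason is kept for later review.
        self.last_reason = reason
        self._running.clear()

    def resume(self) -> None:
        self._running.set()

    def is_running(self) -> bool:
        return self._running.is_set()


def process_batch(items: List[str], switch: InterventionSwitch) -> List[str]:
    """Process items one at a time, stopping as soon as a human pauses the system."""
    processed = []
    for item in items:
        if not switch.is_running():
            break  # halt immediately; remaining items wait for manual review
        processed.append(item.upper())  # stand-in for the real automated step
    return processed
```

The switch is checked before every item rather than once per batch, so a pause takes effect within one step of the automated process.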

Best Practices and Proactive Strategies for Human Oversight in AI

To maximize the impact of human oversight in AI, forward-thinking organizations adopt a multilayered approach. These best practices keep AI compliant with legal and ethical standards. They also foster innovation by creating accountable, transparent processes. Below are key strategies that industry leaders employ today:

- Implementing checks and review points in AI systems: Embedding routine human reviews—at both input and output stages—helps catch errors, reduce bias, and ensure that organizational standards are consistently met.

- Training for oversight teams: Regular, targeted education for oversight personnel strengthens their abilities to spot ethical issues and technical glitches, ensuring human review is both competent and effective.

- Documentation and audit trails to ensure transparency and accountability: Detailed logs of oversight decisions create robust evidence for compliance audits and build trust with stakeholders, regulators, and the public.

- Cross-disciplinary collaboration (ethics, legal, technical): Bringing together experts from multiple backgrounds ensures holistic risk management, closing gaps that single-discipline teams might overlook.

By institutionalizing these best practices, developers and deployers can build trust with users and regulators, maintaining a positive reputation even as AI adoption accelerates.
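The documentation and audit-trail practice can be sketched as an append-only log of review decisions. This example assumes a hypothetical `AuditTrail` class. It also adds one technique the list does not mention: hash chaining, in which each entry stores the SHA-256 hash of the previous entry so that later tampering is detectable. Treat it as an illustration, not a compliance-ready logging system.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only log of human review decisions (hypothetical structure)."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def record(self, output_id: str, reviewer: str,
               decision: str, rationale: str) -> Dict[str, str]:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "output_id": output_id,
            "reviewer": reviewer,
            "decision": decision,  # e.g. "approve", "modify", "reject"
            "rationale": rationale,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: Dict[str, str]) -> str:
        # Hash every field except the hash itself, with keys sorted for a stable encoding.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Return True only if no entry has been altered or reordered since it was recorded."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```

Each record captures who decided what and why, which is the kind of evidence a compliance audit would ask for. The hash chain makes silent edits to past decisions visible when `verify()` is run.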

Frequently Asked Questions (FAQs) on Human Oversight in AI Systems

- What are examples of effective human oversight in AI content moderation? Real-world examples include social media platforms where human moderators review suspicious automated content, financial institutions where experts audit AI-generated loan recommendations, and healthcare professionals double-checking AI diagnostic outputs. These interventions help ensure that outputs are fair, accurate, and compliant with ethical considerations.

- Can human oversight fully eliminate AI risks? No oversight framework is perfect. While human involvement can significantly reduce risks—such as bias, misinformation, or regulatory breaches—it cannot guarantee total safety. Continuous learning and adaptive oversight are crucial for responding to evolving threats and ensuring that AI technologies remain under meaningful human control.

- How does oversight differ in traditional vs generative AI? Traditional AI systems typically produce constrained outputs such as scores, classifications, or rule-based decisions, so oversight focuses on verifying data quality and decision logic. In generative AI, oversight includes more nuanced validation of open-ended outputs. Reviewers must ensure that content is not only factually correct but also ethical and audience-appropriate.

- What are the costs associated with establishing human oversight frameworks? Costs include training oversight teams, investing in audit infrastructure, developing transparent interface tools, and potentially slowing down AI deployment timelines. However, these investments can be offset by a reduced risk of costly public failures and by smoother long-term regulatory compliance.

Pulling It Together: The Enduring Value of Human Oversight in AI Systems

"Human oversight remains a non-negotiable safeguard as AI systems shape critical decisions and influence society at scale."

Embrace human oversight in AI: embed diverse review teams, invest in training and transparent frameworks, and regularly audit AI content to defend against costly errors and ethical lapses.

Incorporating human oversight into AI systems is essential to ensure ethical decision-making, maintain accountability, and mitigate potential risks. The European Union’s AI Act emphasizes this by requiring high-risk AI systems to be designed for effective human supervision, particularly in sectors like healthcare and public safety, where errors can have significant consequences. (artificialintelligenceact.eu) Additionally, integrating ethical AI frameworks and Human-in-the-Loop (HITL) systems ensures that AI technologies supplement rather than replace human judgment, maintaining a balance between leveraging AI capabilities and preserving human control. (orhanergun.net) By exploring these resources, you can gain a deeper understanding of the critical role human oversight plays in developing responsible and trustworthy AI systems.
